A couple of weeks ago, I was shown a solution that was still in development, and I volunteered to be a beta tester. I always say YES! to these opportunities, so I embarked on learning a new (yes, again!) platform for ML & AI. Read more about the Alteryx + Veritone partnership and the aiWARE tools.

So, why did I do it? Well, first, I’m very curious by nature. Second, the demo I saw explained some of the ML & AI underworld to a basic guy like me. Finally, it was fully integrated with Alteryx (so I thought I had that part covered). A very nice starting point for this journey.

The example I saw was part of an Alteryx webinar where @MarqueeCrew and @LeahK (two very well-known Alteryx superheroes) were talking, with the video switching faces as they talked. Four or five photos of each, downloaded from Google Images, were uploaded to train the model.

Once the model was trained, it ran and voilà! The results showed some very curious information:

- It recognized when each identified speaker was talking

- It detected a speaker it was not trained on (another Alteryx superhero, @CharlieS)

- It transcribed who said what, and at what time

- It picked up the expression in their voice when they said it

For those with me so far, let me tell you… if your head is not exploding right now, it will be in a few paragraphs.

My First Test

That was my experience with the demo, so I decided to try it myself (thinking I wasn’t capable of pulling it off). Big surprise! I received two Alteryx macros in advance: the aiWARE Input tool and the Run aiWARE tool (accessible on the Gallery).

As an Alteryx enthusiast, the first thing I did was drop the Run aiWARE tool onto the canvas. I was pleasantly surprised to find that I could sign up for a free account, free trial included, from within the macro and without leaving Alteryx. So I did!

Now that I had my credentials, I was able to log in, and the main interface of the tool was presented:

Select Data: Lists options to retrieve your data:

Upload Type: Whether you’re uploading a single file or the contents of a folder:

Project Name: Just a name to keep your projects organized.

Local File: This field appeared because I chose to upload a local file.

Flow Options:

Here starts the amazing journey for me. You can use standard workflows, published by Company, or you can use yours, through the Custom Flows Option.

For the Standard Flows, here is a partial list of those available to Alteryx right now:

I decided to try Facial Recognition first, because of two disappointing experiences I had previously had with this kind of model from two other vendors. When I selected Facial Recognition, I was presented with a new drop-down, Select Library:

And a very suggestive option to Train my First Library. Obviously I clicked the link.

In the same panel, I was presented with the Libraries section of the solution, where you can build, edit/update/expand or delete libraries for model training.

I looked through my work laptop and found some pictures of my kids, so I fed the library with them. The process is simple and straightforward, with instructions at every step. It took me more time to find the pictures than to train the model with them.

So finally, I selected the library for this test, and ran the workflow (I am a Browse tool guy in development, so I added one first).

The workflow submits a job to the server, which starts performing the Facial Recognition. A very important thing to notice is that there’s no need to wait until the task finishes, so meanwhile you can work on other things… (yes, I know, bummer, but your boss will love this!)

The execution results:

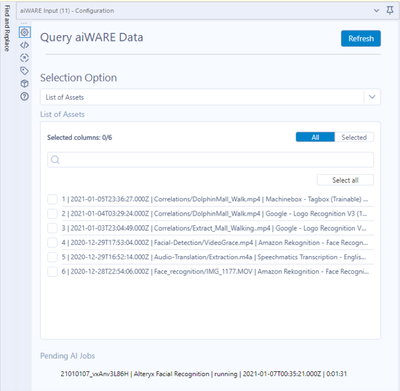

If you’ve been reading carefully, you’ll remember I received two tools, and now it’s the second one’s turn, on a new canvas. The aiWARE Input tool asked me to log in again, and returned the following:

Selection Option: Lets you choose where the results will come from.

List of Assets: In this case, all the jobs I previously ran.

Pending AI Jobs: Shows which jobs are still running on the server.

I ran the second workflow (a pretty basic one, btw). It took 2.9 seconds, and I went directly to the results. They come back as JSON, and since I’m in Alteryx, parsing is no problem!

JSON Parse to the rescue and after a little simple Alteryx magic, I have readable results:
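For readers outside Alteryx, here is a rough Python sketch of the kind of flattening the JSON Parse tool does: every leaf value becomes one row, keyed by its dotted path. The payload and its field names (`series`, `startTimeMs`, `object`, and so on) are made up for illustration and are not the actual aiWARE output schema.

```python
import json


def flatten(value, prefix=""):
    """Yield (dotted_path, scalar) pairs for every leaf in a parsed JSON value."""
    if isinstance(value, dict):
        for key, child in value.items():
            yield from flatten(child, f"{prefix}.{key}" if prefix else str(key))
    elif isinstance(value, list):
        for index, child in enumerate(value):
            yield from flatten(child, f"{prefix}.{index}" if prefix else str(index))
    else:
        yield prefix, value


# Hypothetical payload: field names are illustrative, not the real aiWARE schema.
raw = (
    '{"series": [{"startTimeMs": 0, "stopTimeMs": 1000, '
    '"object": {"label": "Kid A", "confidence": 0.97}}]}'
)

rows = list(flatten(json.loads(raw)))
for name, val in rows:
    print(name, val)
```

From rows like these, a bit of splitting on the dots and pivoting gets you one readable record per detection.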

I’m not going to go into much detail on the results, because I think you need to test this and be amazed yourself, but I will comment on some of them:

Emotion detection (up to seven emotions tested by frame) and Confidence probabilities of each one:
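If you want a single label per frame instead of the full list of probabilities, one simple option is to keep the emotion with the highest confidence. The sketch below assumes the parsed results have already been reshaped into one dictionary of scores per frame; the frame numbers, emotion names and values are invented for illustration.

```python
def top_emotion(scores):
    """Return the (emotion, confidence) pair with the highest confidence."""
    return max(scores.items(), key=lambda item: item[1])


# Hypothetical per-frame scores, not real output from the engine.
frames = [
    {"frame": 1, "emotions": {"happy": 0.81, "surprised": 0.12, "neutral": 0.07}},
    {"frame": 2, "emotions": {"happy": 0.30, "surprised": 0.55, "neutral": 0.15}},
]

dominant = {f["frame"]: top_emotion(f["emotions"]) for f in frames}
for frame, (emotion, confidence) in dominant.items():
    print(frame, emotion, confidence)
```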

Twenty-nine face landmarks evaluated per subject and their probability score:

Each person in the library was recognized, based on a set of four pictures of each (the blank one is my goddaughter, whose pictures were not included in the training set):

Part of the video where they were recognized:
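To turn many short detections into "this person appears from here to there" segments, you can merge detections of the same person that touch or overlap in time. This is only a sketch under my own assumptions: each detection is a `(person, start_ms, stop_ms)` tuple, and the labels and timestamps are invented.

```python
from collections import defaultdict


def merge_segments(detections, max_gap_ms=0):
    """Merge per-person detections whose gap is at most max_gap_ms."""
    by_person = defaultdict(list)
    for person, start, stop in detections:
        by_person[person].append((start, stop))

    merged = {}
    for person, spans in by_person.items():
        spans.sort()
        out = [list(spans[0])]
        for start, stop in spans[1:]:
            if start - out[-1][1] <= max_gap_ms:
                # Close enough to the previous span: extend it.
                out[-1][1] = max(out[-1][1], stop)
            else:
                out.append([start, stop])
        merged[person] = [tuple(span) for span in out]
    return merged


# Hypothetical detections: (person, start_ms, stop_ms).
detections = [
    ("Kid A", 0, 1000),
    ("Kid A", 1000, 2000),
    ("Kid B", 1500, 2500),
    ("Kid A", 5000, 6000),
]

segments = merge_segments(detections)
print(segments)
```

Raising `max_gap_ms` lets brief dropouts (a head turn, for example) count as one continuous appearance.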

And a lot of other results, such as whether the model detected beards, sunglasses, poses, etc.

Conclusion? It took me 10 minutes from scratch to get the experiment done: gathering the pictures, uploading the video, training the model and running the face recognition.

A more complex experiment

Since I was already hooked on these cool new tools, I decided to take on a real business case. A couple of years ago, a potential customer asked us about feature recognition on shopping mall footage.

What better time to test it than right now!

I got a video online (a walk through the Dolphin mall in Miami that somebody posted on the internet), and decided to test the Logo Recognition Engine.

I strongly recommend that you give this a test. I’m sure it’ll blow your mind as it did mine. If you have any questions, please reply to this article. Read more about the Alteryx + Veritone partnership and the aiWARE tools.